LeR complete examples

Please refer to the documentation for more details.

TOC:

Short lensed or unlensed BBH example with three detectors

Simulation of the GW CBC population (unlensed)

Calculation of unlensed rates

Simulation of the GW CBC population (lensed)

Calculation of lensed rates

Comparison of the rates

Getting generated parameters

Manually changing paramters and recompute the rates

Plot the generated parameters

Using custom functions and parameters

Defining custom functions and parameters

LeR initialization with custom functions and parameters

Sampling (Unlensed)

Sampling (Lensed)

Rate calculation and comparison

Uning available model functions

Comparison of mass distribution model

Comparison of Axis-ratio model of the lensing galaxy

Generating particular number of detectable events

Unlensed case

Lensed case

Using custom detection criteria

Defining custom detection criteria

Testing the custom detection criteria

Using custom detection criteria with LeR

Analysis: SNR (with ANN) + SNR recalculation (inner product)

Short lensed or unlensed BBH example with three detectors.

This part of the notebook is a short example to simulate lensed and unlensed binary black hole mergers and calculate their rates (\(yr^{-1}\)) and finally compare the results.

All the outputs are saved in the

ler_datadirectory by default.lerpackage heavily relies on multiprocessing capability of python, and some of the multiprocessing settings are set by default when calling the package. If issue arises, please raise issue in github.ler.issue.

[1]:

# call the LeR class

from ler.rates import LeR

Setting multiprocessing start method to 'fork'

You can check for more details on

LeRclass from either the documentation or by callinghelp(LeR)orLeR?(in the Jupyter Notebook).Depending on the number of CPU cores used and microprocessor architecture,

LeRclass initialization will take some time to generate interpolation data for the models. Please be patient. e.g. it takes 1m 56s for 4 cores in M2 pro Aplle intel chip 16GB RAM.The generated interpolation data is saved and will be call automatically when the same model is used again. If user wants to regenerate the interpolation data, please set

create_new_interpolator=Truein theLeRclass initialization. Or you can simply delete theinterpolator_pickledirectory or specific path in theinterpolator_pickledirectory.if you don’t want the models and its parameters to print. Default is True.

ler = LeR(verbose=False)set ‘npool’ according to your machine’s available CPU cores. Default is 4.

to check no. of cores,

import multiprocessing as mp print(mp.cpu_count())

[2]:

# LeR initialization with default arguments

ler = LeR()

z_to_luminosity_distance interpolator will be loaded from ./interpolator_pickle/z_to_luminosity_distance/z_to_luminosity_distance_0.pickle

differential_comoving_volume interpolator will be loaded from ./interpolator_pickle/differential_comoving_volume/differential_comoving_volume_0.pickle

merger_rate_density interpolator will be loaded from ./interpolator_pickle/merger_rate_density/merger_rate_density_0.pickle

z_to_Dc interpolator will be loaded from ./interpolator_pickle/z_to_Dc/z_to_Dc_0.pickle

Dc_to_z interpolator will be loaded from ./interpolator_pickle/Dc_to_z/Dc_to_z_0.pickle

angular_diameter_distance interpolator will be loaded from ./interpolator_pickle/angular_diameter_distance/angular_diameter_distance_0.pickle

differential_comoving_volume interpolator will be loaded from ./interpolator_pickle/differential_comoving_volume/differential_comoving_volume_2.pickle

velocity_dispersion_ewoud interpolator will be loaded from ./interpolator_pickle/velocity_dispersion_ewoud/velocity_dispersion_ewoud_0.pickle

optical_depth_SIE_hemanta interpolator will be loaded from ./interpolator_pickle/optical_depth_SIE_hemanta/optical_depth_SIE_hemanta_0.pickle

input_params_image {'n_min_images': 2, 'n_max_images': 4, 'geocent_time_min': 1126259462.4, 'geocent_time_max': 1756979462.4, 'lens_model_list': ['EPL_NUMBA', 'SHEAR'], 'source_priors': None, 'source_priors_params': None, 'spin_zero': True, 'spin_precession': False}

luminosity_distance_to_z interpolator will be loaded from ./interpolator_pickle/luminosity_distance_to_z/luminosity_distance_to_z_0.pickle

psds not given. Choosing bilby's default psds

Interpolator will be loaded for L1 detector from ./interpolator_pickle/L1/partialSNR_dict_0.pickle

Interpolator will be loaded for H1 detector from ./interpolator_pickle/H1/partialSNR_dict_0.pickle

Interpolator will be loaded for V1 detector from ./interpolator_pickle/V1/partialSNR_dict_0.pickle

# LeR set up params:

npool = 4,

z_min = 0.0,

z_max = 10.0,

event_type = 'BBH',

size = 100000,

batch_size = 50000,

cosmology = LambdaCDM(H0=70.0 km / (Mpc s), Om0=0.3, Ode0=0.7, Tcmb0=0.0 K, Neff=3.04, m_nu=None, Ob0=None),

snr_finder = <bound method GWSNR.snr of <gwsnr.gwsnr.GWSNR object at 0x11251f9d0>>,

json_file_names = {'ler_params': 'ler_params.json', 'unlensed_param': 'unlensed_param.json', 'unlensed_param_detectable': 'unlensed_param_detectable.json', 'lensed_param': 'lensed_param.json', 'lensed_param_detectable': 'lensed_param_detectable.json'},

interpolator_directory = './interpolator_pickle',

ler_directory = './ler_data',

# LeR also takes CBCSourceParameterDistribution class params as kwargs, as follows:

source_priors = {'merger_rate_density': 'merger_rate_density_bbh_popI_II_oguri2018', 'source_frame_masses': 'binary_masses_BBH_popI_II_powerlaw_gaussian', 'zs': 'sample_source_redshift', 'geocent_time': 'sampler_uniform', 'ra': 'sampler_uniform', 'dec': 'sampler_cosine', 'phase': 'sampler_uniform', 'psi': 'sampler_uniform', 'theta_jn': 'sampler_sine'},

source_priors_params = {'merger_rate_density': {'R0': 2.39e-08, 'b2': 1.6, 'b3': 2.0, 'b4': 30}, 'source_frame_masses': {'mminbh': 4.98, 'mmaxbh': 112.5, 'alpha': 3.78, 'mu_g': 32.27, 'sigma_g': 3.88, 'lambda_peak': 0.03, 'delta_m': 4.8, 'beta': 0.81}, 'zs': None, 'geocent_time': {'min_': 1238166018, 'max_': 1269702018}, 'ra': {'min_': 0.0, 'max_': 6.283185307179586}, 'dec': None, 'phase': {'min_': 0.0, 'max_': 6.283185307179586}, 'psi': {'min_': 0.0, 'max_': 3.141592653589793}, 'theta_jn': None},

spin_zero = True,

spin_precession = False,

create_new_interpolator = False,

# LeR also takes LensGalaxyParameterDistribution class params as kwargs, as follows:

lens_type = 'epl_shear_galaxy',

lens_functions = {'strong_lensing_condition': 'rjs_with_cross_section_SIE', 'optical_depth': 'optical_depth_SIE_hemanta', 'param_sampler_type': 'sample_all_routine'},

lens_priors = {'source_redshift_sl': 'strongly_lensed_source_redshifts', 'lens_redshift': 'lens_redshift_SDSS_catalogue', 'velocity_dispersion': 'velocity_dispersion_ewoud', 'axis_ratio': 'axis_ratio_rayleigh', 'axis_rotation_angle': 'axis_rotation_angle_uniform', 'external_shear': 'shear_norm', 'density_profile_slope': 'density_profile_slope_normal', 'source_parameters': 'sample_gw_parameters'},

lens_priors_params = {'source_redshift_sl': None, 'lens_redshift': None, 'velocity_dispersion': None, 'axis_ratio': {'q_min': 0.2, 'q_max': 1.0}, 'axis_rotation_angle': {'phi_min': 0.0, 'phi_max': 6.283185307179586}, 'external_shear': {'scale': 0.05}, 'density_profile_slope': {'mean': 2.0, 'std': 0.2}, 'source_parameters': None},

# LeR also takes ImageProperties class params as kwargs, as follows:

n_min_images = 2,

n_max_images = 4,

geocent_time_min = 1126259462.4,

geocent_time_max = 1756979462.4,

lens_model_list = ['EPL_NUMBA', 'SHEAR'],

# LeR also takes gwsnr.GWSNR params as kwargs, as follows:

mtot_min = 2.0,

mtot_max = 184.98599853446768,

ratio_min = 0.1,

ratio_max = 1.0,

mtot_resolution = 500,

ratio_resolution = 50,

sampling_frequency = 2048.0,

waveform_approximant = 'IMRPhenomD',

minimum_frequency = 20.0,

snr_type = 'interpolation',

psds = [PowerSpectralDensity(psd_file='None', asd_file='/Users/phurailatpamhemantakumar/anaconda3/envs/ler/lib/python3.10/site-packages/bilby/gw/detector/noise_curves/aLIGO_O4_high_asd.txt'), PowerSpectralDensity(psd_file='None', asd_file='/Users/phurailatpamhemantakumar/anaconda3/envs/ler/lib/python3.10/site-packages/bilby/gw/detector/noise_curves/aLIGO_O4_high_asd.txt'), PowerSpectralDensity(psd_file='None', asd_file='/Users/phurailatpamhemantakumar/anaconda3/envs/ler/lib/python3.10/site-packages/bilby/gw/detector/noise_curves/AdV_asd.txt')],

ifos = None,

interpolator_dir = './interpolator_pickle',

gwsnr_verbose = False,

multiprocessing_verbose = True,

mtot_cut = True,

For reference, the chosen source parameters are listed below:

merger_rate_density = 'merger_rate_density_bbh_popI_II_oguri2018'

merger_rate_density_params = {'R0': 2.39e-08, 'b2': 1.6, 'b3': 2.0, 'b4': 30}

source_frame_masses = 'binary_masses_BBH_popI_II_powerlaw_gaussian'

source_frame_masses_params = {'mminbh': 4.98, 'mmaxbh': 112.5, 'alpha': 3.78, 'mu_g': 32.27, 'sigma_g': 3.88, 'lambda_peak': 0.03, 'delta_m': 4.8, 'beta': 0.81}

geocent_time = 'sampler_uniform'

geocent_time_params = {'min_': 1238166018, 'max_': 1269702018}

ra = 'sampler_uniform'

ra_params = {'min_': 0.0, 'max_': 6.283185307179586}

dec = 'sampler_cosine'

dec_params = None

phase = 'sampler_uniform'

phase_params = {'min_': 0.0, 'max_': 6.283185307179586}

psi = 'sampler_uniform'

psi_params = {'min_': 0.0, 'max_': 3.141592653589793}

theta_jn = 'sampler_sine'

theta_jn_params = None

For reference, the chosen lens related parameters and functions are listed below:

lens_redshift = 'lens_redshift_SDSS_catalogue'

lens_redshift_params = None

velocity_dispersion = 'velocity_dispersion_ewoud'

velocity_dispersion_params = None

axis_ratio = 'axis_ratio_rayleigh'

axis_ratio_params = {'q_min': 0.2, 'q_max': 1.0}

axis_rotation_angle = 'axis_rotation_angle_uniform'

axis_rotation_angle_params = {'phi_min': 0.0, 'phi_max': 6.283185307179586}

shear = 'shear_norm'

shear_params = {'scale': 0.05}

density_profile_slope = 'density_profile_slope_normal'

density_profile_slope_params = {'mean': 2.0, 'std': 0.2}

Lens functions:

strong_lensing_condition = 'rjs_with_cross_section_SIE'

optical_depth = 'optical_depth_SIE_hemanta'

Simulation of the GW CBC population (unlensed).

this will generate a json file with the simulated population parameters.

by default 100,000 events will be sampled with batches of 50,000. For more realistic results, keep batch_size=50000 and size>=1000000.

results will be saved in the same directory as json file.

resume=Truewill resume the simulation from the last saved batch.if you dont’t need to save the file at the end of each batch sampling, set

save_batch=False(default).

[8]:

# ler.batch_size = 100000 # for faster computation

unlensed_param = ler.unlensed_cbc_statistics(size=100000, resume=False, save_batch=False)

unlensed params will be store in ./ler_data/unlensed_param.json

chosen batch size = 50000 with total size = 100000

There will be 2 batche(s)

Batch no. 1

sampling gw source params...

calculating snrs...

Batch no. 2

sampling gw source params...

calculating snrs...

saving all unlensed parameters in ./ler_data/unlensed_param.json

Calculation of unlensed rates.

[9]:

rate_unlensed, unlensed_param_detectable = ler.unlensed_rate()

Getting unlensed_param from json file ./ler_data/unlensed_param.json...

given detectability_condition == 'step_function'

total unlensed rate (yr^-1): 432.7257078089194

number of simulated unlensed detectable events: 418

number of simulated all unlensed events: 100000

storing detectable params in ./ler_data/unlensed_param_detectable.json

[10]:

# look for parameters names included in the generated data

print(unlensed_param_detectable.keys())

dict_keys(['zs', 'geocent_time', 'ra', 'dec', 'phase', 'psi', 'theta_jn', 'luminosity_distance', 'mass_1_source', 'mass_2_source', 'mass_1', 'mass_2', 'L1', 'H1', 'V1', 'snr_net'])

Simulation of the GW CBC population (lensed).

this will generate a json file with the simulated source parameters, lensed parameters and image parameters.

if the program hangs dues to memory issues,

try reducing the batch size.

and you can resume from the last saved batch. But you need to set save_batch=True.

save_batch=False(default) will make the code run faster but you will not have the results saved in the end of each batch.

[11]:

# ler.batch_size = 50000

lensed_param = ler.lensed_cbc_statistics(size=100000, resume=False, save_batch=False)

lensed params will be store in ./ler_data/lensed_param.json

chosen batch size = 50000 with total size = 100000

There will be 2 batche(s)

Batch no. 1

sampling lensed params...

solving lens equations...

100%|███████████████████████████████████████████████████████| 50000/50000 [00:11<00:00, 4376.58it/s]

calculating snrs...

Batch no. 2

sampling lensed params...

solving lens equations...

100%|███████████████████████████████████████████████████████| 50000/50000 [00:11<00:00, 4262.07it/s]

Invalid sample found. Resampling 1 lensed events...

solving lens equations...

100%|█████████████████████████████████████████████████████████████████| 1/1 [00:00<00:00, 11.91it/s]

calculating snrs...

saving all lensed parameters in ./ler_data/lensed_param.json

Calculation of lensed rates.

[12]:

ler.lensed_rate();

Getting lensed_param from json file ./ler_data/lensed_param.json...

given detectability_condition == step_function

total lensed rate (yr^-1): 1.1690813631723105

number of simulated lensed detectable events: 515

number of simulated all lensed events: 100000

storing detectable params in ./ler_data/lensed_param_detectable.json

Comparison of the rates.

ler.rate_ratiofunction by default assumes data generated and stored in previous steps. Otherwise you have to provide the data or the file path.

[13]:

ler.rate_ratio();

unlensed_rate: 432.7257078089194

lensed_rate: 1.1690813631723105

ratio: 370.1416526175006

if you want to calculate the rates, and compare it at the same time, run the following command.

rate_ratio, unlensed_param_detectable, lensed_param_detectable =ler.rate_comparison_with_rate_calculation()

Note: The above example is for spin-less systems. IMRPhenomD (spin-less) is the default waveform approximant. To see LeR configuration, run

ler.print_all_params()

Getting generated parameters.

what are the saved files?

all saved files are in the

ler_datadirectory by default.

[18]:

#ler.json_file_names, ler.ler_directory

print(f"ler directory: {ler.ler_directory}")

print(f"ler json file names: {ler.json_file_names}")

ler directory: ./ler_data

ler json file names: {'ler_params': 'ler_params.json', 'unlensed_param': 'unlensed_param.json', 'unlensed_param_detectable': 'unlensed_param_detectable.json', 'lensed_param': 'lensed_param.json', 'lensed_param_detectable': 'lensed_param_detectable.json'}

you can use ler attributes or call the relevant json file

[14]:

# the generated parameters are not store in the ler instance, but in the json files

# you can access the generated parameters from the json files as shown below

unlensed_param_detectable = ler.unlensed_param_detectable

lensed_param_detectable = ler.lensed_param_detectable

# unlensed_param = ler.unlensed_param

# lensed_param = ler.lensed_param

# print keys of the generated parameters

print(f"unlensed_param_detectable keys: {unlensed_param_detectable.keys()}")

print(f"lensed_param_detectable keys: {lensed_param_detectable.keys()}")

unlensed_param_detectable keys: dict_keys(['zs', 'geocent_time', 'ra', 'dec', 'phase', 'psi', 'theta_jn', 'luminosity_distance', 'mass_1_source', 'mass_2_source', 'mass_1', 'mass_2', 'L1', 'H1', 'V1', 'snr_net'])

lensed_param_detectable keys: dict_keys(['zl', 'zs', 'sigma', 'q', 'theta_E', 'phi', 'e1', 'e2', 'gamma1', 'gamma2', 'gamma', 'geocent_time', 'ra', 'dec', 'phase', 'psi', 'theta_jn', 'luminosity_distance', 'mass_1_source', 'mass_2_source', 'mass_1', 'mass_2', 'x0_image_positions', 'x1_image_positions', 'magnifications', 'time_delays', 'image_type', 'n_images', 'effective_luminosity_distance', 'effective_geocent_time', 'snr_net', 'L1', 'H1', 'V1'])

here is another way to access the generated parameters from the json files

get_param_from_jsonandload_jsonfunctions fromler.utilssub-module will be use in the following examples.get_param_from_jsonwill automatically assume the dictionary value is a list and will be converted to a numpy array, whileload_jsonwill return the json file’s dictionary as it is.get_param_from_jsonwill recognize numpy specific data types (e.g. nan and inf) and convert them to numpy arrays.to save data as dictionary, use

save_jsonorappend_jsonfunction fromler.utilssub-module.all function usage of

ler.utilssub-module can be found in the ler.git example.

[16]:

from ler.utils import get_param_from_json

unlensed_param_detectable = get_param_from_json(ler.ler_directory+'/'+ler.json_file_names['unlensed_param_detectable'])

lensed_param_detectable = get_param_from_json(ler.ler_directory+'/'+ler.json_file_names['lensed_param_detectable'])

# unlensed_param = get_param_from_json(ler.ler_directory+'/'+ler.json_file_names['unlensed_param'])

# lensed_param = get_param_from_json(ler.ler_directory+'/'+ler.json_file_names['lensed_param'])

# print keys of the generated parameters

print(f"unlensed_param_detectable keys: {unlensed_param_detectable.keys()}")

print(f"lensed_param_detectable keys: {lensed_param_detectable.keys()}")

unlensed_param_detectable keys: dict_keys(['zs', 'geocent_time', 'ra', 'dec', 'phase', 'psi', 'theta_jn', 'luminosity_distance', 'mass_1_source', 'mass_2_source', 'mass_1', 'mass_2', 'L1', 'H1', 'V1', 'snr_net'])

lensed_param_detectable keys: dict_keys(['zl', 'zs', 'sigma', 'q', 'theta_E', 'phi', 'e1', 'e2', 'gamma1', 'gamma2', 'gamma', 'geocent_time', 'ra', 'dec', 'phase', 'psi', 'theta_jn', 'luminosity_distance', 'mass_1_source', 'mass_2_source', 'mass_1', 'mass_2', 'x0_image_positions', 'x1_image_positions', 'magnifications', 'time_delays', 'image_type', 'n_images', 'effective_luminosity_distance', 'effective_geocent_time', 'snr_net', 'L1', 'H1', 'V1'])

Note: all LeR initialization parameters and some important results are saved in a json file.

[21]:

from ler.utils import load_json

# ler_params = load_json(ler.ler_directory+"/"+ler.json_file_names["ler_params"])

ler_params = load_json('ler_data/ler_params.json')

print(ler_params.keys())

print("detectable_unlensed_rate_per_year: ", ler_params['detectable_unlensed_rate_per_year'])

print("detectable_lensed_rate_per_year; ",ler_params['detectable_lensed_rate_per_year'])

print("rate_ratio: ",ler_params['rate_ratio'])

dict_keys(['npool', 'z_min', 'z_max', 'size', 'batch_size', 'cosmology', 'snr_finder', 'json_file_names', 'interpolator_directory', 'gw_param_sampler_dict', 'snr_calculator_dict', 'detectable_unlensed_rate_per_year', 'detectability_condition', 'detectable_lensed_rate_per_year', 'rate_ratio'])

detectable_unlensed_rate_per_year: 420.30296021631887

detectable_lensed_rate_per_year; 1.094440169697147

rate_ratio: 384.0346616047772

Plot the generated parameters

Below I plot the generated redshift distributions of the lensed and unlensed populations and comapre them.

[22]:

# quick plot

import matplotlib.pyplot as plt

from ler.utils import plots as lerplt

# plotting the distribution of event parameters

# comparision of redshift distribution for lensed and unlensed events

# param_dict can be either a dictionary or a json file name that contains the parameters

plt.figure(figsize=(6, 4))

# for unlensed case

lerplt.param_plot(

param_name='zs',

param_dict='ler_data/unlensed_param_detectable.json',

plot_label='zs (bbh-detectable)',

histogram=False,

kde=True,

kde_bandwidth=0.5,

)

lerplt.param_plot(

param_name='zs',

param_dict='ler_data/unlensed_param.json',

plot_label='zs (bbh-all)',

histogram=False,

kde=True,

)

# for lensed case

lerplt.param_plot(

param_name='zs',

param_dict='ler_data/lensed_param_detectable.json',

plot_label='zs (bbh-lensed-detectable)',

histogram=False,

kde=True,

kde_bandwidth=0.5,

)

lerplt.param_plot(

param_name='zs',

param_dict='ler_data/lensed_param.json',

plot_label='zs (bbh-lensed-all)',

histogram=False,

kde=True,

)

plt.xlim(0.001,8)

plt.grid(alpha=0.4)

plt.xlabel('Source redshift (zs)')

plt.ylabel('Probability Density')

plt.show()

getting gw_params from json file ler_data/unlensed_param_detectable.json...

getting gw_params from json file ler_data/unlensed_param.json...

getting gw_params from json file ler_data/lensed_param_detectable.json...

getting gw_params from json file ler_data/lensed_param.json...

Using custom functions and parameters.

lerallows internal model functions to be change with custom functions.It also allows to change the default parameters of the existing model functions.

First let’s look at what are the input parameters available for LeR class. The input paramters can divided into five categories

ler.LeR set up params

ler.CBCSourceParameterDistribution set up params (as kwargs)

ler.LensGalaxyParameterDistribution set up params (as kwargs)

ler.ImageProperties set up params (as kwargs)

gwsnr.GWSNR set up params (as kwargs)

Complete LeR initialization is shown below,

[3]:

# # below is the example of LeR initialization with all the arguments.

from ler.rates import LeR

import numpy as np

import matplotlib.pyplot as plt

from astropy.cosmology import LambdaCDM

# # Uncomment the below code if you need to change the default arguments.

# ler = LeR(

# # LeR setup arguments

# npool=4, # number of processors to use

# z_min=0.0, # minimum redshift

# z_max=10.0, # maximum redshift

# event_type='BBH', # event type

# size=100000, # number of events to simulate

# batch_size=50000, # batch size

# cosmology=LambdaCDM(H0=70, Om0=0.3, Ode0=0.7), # cosmology

# snr_finder=None, # snr calculator from 'gwsnr' package will be used

# pdet_finder=None, # will not be consider unless specified

# list_of_detectors=None, # list of detectors that will be considered when calculating snr or pdet for lensed events. if None, all the detectors from 'gwsnr' will be considered

# json_file_names=dict(

# ler_params="ler_params.json", # to store initialization parameters and important results

# unlensed_param="unlensed_param.json", # to store all unlensed events

# unlensed_param_detectable="unlensed_param_detectable.json", # to store only detectable unlensed events

# lensed_param="lensed_param.json", # to store all lensed events

# lensed_param_detectable="lensed_param_detectable.json"), # to store only detectable lensed events

# interpolator_directory='./interpolator_pickle', # directory to store the interpolator pickle files. 'ler' uses interpolation to get values of various functions to speed up the calculations (relying on numba njit).

# ler_directory='./ler_data', # directory to store all the outputs

# verbose=False, # if True, will print all information at initialization

# # CBCSourceParameterDistribution class arguments

# source_priors= {'merger_rate_density': 'merger_rate_density_bbh_popI_II_oguri2018', 'source_frame_masses': 'binary_masses_BBH_popI_II_powerlaw_gaussian', 'zs': 'sample_source_redshift', 'geocent_time': 'sampler_uniform', 'ra': 'sampler_uniform', 'dec': 'sampler_cosine', 'phase': 'sampler_uniform', 'psi': 'sampler_uniform', 'theta_jn': 'sampler_sine'},

# source_priors_params= {'merger_rate_density': {'R0': 2.39e-08, 'b2': 1.6, 'b3': 2.0, 'b4': 30}, 'source_frame_masses': {'mminbh': 4.98, 'mmaxbh': 112.5, 'alpha': 3.78, 'mu_g': 32.27, 'sigma_g': 3.88, 'lambda_peak': 0.03, 'delta_m': 4.8, 'beta': 0.81}, 'zs': None, 'geocent_time': {'min_': 1238166018, 'max_': 1269702018}, 'ra': {'min_': 0.0, 'max_': 6.283185307179586}, 'dec': None, 'phase': {'min_': 0.0, 'max_': 6.283185307179586}, 'psi': {'min_': 0.0, 'max_': 3.141592653589793}, 'theta_jn': None},

# spin_zero= True, # if True, spins will be set to zero

# spin_precession= False, # if True, spins will be precessing

# # LensGalaxyParameterDistribution class arguments

# lens_type = 'epl_shear_galaxy',

# lens_functions = {'strong_lensing_condition': 'rjs_with_cross_section_SIE', 'optical_depth': 'optical_depth_SIE_hemanta', 'param_sampler_type': 'sample_all_routine'},

# lens_priors = {'source_redshift_sl': 'strongly_lensed_source_redshifts', 'lens_redshift': 'lens_redshift_SDSS_catalogue', 'velocity_dispersion': 'velocity_dispersion_ewoud', 'axis_ratio': 'axis_ratio_rayleigh', 'axis_rotation_angle': 'axis_rotation_angle_uniform', 'external_shear': 'shear_norm', 'density_profile_slope': 'density_profile_slope_normal', 'source_parameters': 'sample_gw_parameters'},

# lens_priors_params = {'source_redshift_sl': None, 'lens_redshift': None, 'velocity_dispersion': None, 'axis_ratio': {'q_min': 0.2, 'q_max': 1.0}, 'axis_rotation_angle': {'phi_min': 0.0, 'phi_max': 6.283185307179586}, 'external_shear': {'scale': 0.05}, 'density_profile_slope': {'mean': 2.0, 'std': 0.2}, 'source_parameters': None},

# # ImageProperties class arguments

# n_min_images = 2,

# n_max_images = 4,

# geocent_time_min = 1126259462.4,

# geocent_time_max = 1756979462.4,

# lens_model_list = ['EPL_NUMBA', 'SHEAR'],

# # gwsnr package arguments

# mtot_min = 2.0,

# mtot_max = 184.98599853446768,

# ratio_min = 0.1,

# ratio_max = 1.0,

# mtot_resolution = 500,

# ratio_resolution = 50,

# sampling_frequency = 2048.0,

# waveform_approximant = 'IMRPhenomD',

# minimum_frequency = 20.0,

# snr_type = 'interpolation',

# psds = {'L1':'aLIGO_O4_high_asd.txt','H1':'aLIGO_O4_high_asd.txt', 'V1':'AdV_asd.txt', 'K1':'KAGRA_design_asd.txt'},

# ifos = ['L1', 'H1', 'V1'],

# interpolator_dir = './interpolator_pickle',

# gwsnr_verbose = False,

# multiprocessing_verbose = True,

# mtot_cut = True,

# # common arguments, to generate interpolator

# create_new_interpolator = dict(

# redshift_distribution=dict(create_new=False, resolution=1000),

# z_to_luminosity_distance=dict(create_new=False, resolution=1000),

# velocity_dispersion=dict(create_new=False, resolution=1000),

# axis_ratio=dict(create_new=False, resolution=1000),

# optical_depth=dict(create_new=False, resolution=200),

# z_to_Dc=dict(create_new=False, resolution=1000),

# Dc_to_z=dict(create_new=False, resolution=1000),

# angular_diameter_distance=dict(create_new=False, resolution=1000),

# differential_comoving_volume=dict(create_new=False, resolution=1000),

# Dl_to_z=dict(create_new=False, resolution=1000),

# )

# )

Defining custom functions and parameters.

As an example, I will change,

merger_rate_density_params’s default value of local merger rate density (\(R_0\)) to \(38.8\times 10^{-9} Mpc^{-3} yr^{-1}\) (upper limit of GWTC-3). But, I am still using the default merger_rate_density function, which is

'merger_rate_density_bbh_popI_II_oguri2018'. Note that the accepted \(R_0\) value in GWTC-3 is \(23.9_{-8.6}^{+14.9}\times 10^{-9} \; Mpc^{-3} yr^{-1}\).source_frame_masses to a custom function. This is similar to the internal default function, i.e.

PowerLaw+Peak model. I am usinggwcosmo’spowerlaw_gaussianprior for this example.optical depth for strong lensing condition and velocity dispersion of the lensing galaxy to SIS model and gamma function respectively. The default optical depth is that of the SIE model and default velocity dispersion has additional redshift dependence. Note the minimum and maximum values of the velocity dispersion. Default is \([10, 350] \; km/s\).

gwsnrparameters: By default, it uses IMRPhenomD waveform model with no spin. It uses interpolation method to find the ‘snr’ and it is super fast. But for the example below, I am using IMRPhenomXPHM with precessing spins. This is without interpolation but through inner product method, like that ofbilbybut with njit functions and multiprocessing. It will be slower as compare to interpolation method but faster than that of bilby.

Note: All custom functions sampler should have ‘size’ as the only input.

[23]:

from gwcosmo import priors as p

# define your custom function of mass_1_source and mass_2_source calculation

# it should have 'size' as the only argument

def powerlaw_peak(size):

"""

Function to sample mass1 and mass2 from a powerlaw with a gaussian peak

Parameters

----------

size : `int`

Number of samples to draw

Returns

-------

mass_1_source : `numpy.ndarray`

Array of mass1 samples

mass_2_source : `numpy.ndarray`

Array of mass2 samples

"""

# below is the gwcosmo default values

mminbh=4.98 # Minimum mass of the black hole (Msun)

mmaxbh=86.22 # Maximum mass of the black hole (Msun)

alpha=2.63 # Spectral index for the powerlaw of the primary mass distribution

mu_g=33.07 # Mean of the Gaussian component in the primary mass distribution

sigma_g=5.69 # Width of the Gaussian component in the primary mass distribution

lambda_peak=0.10 # Fraction of the model in the Gaussian component

delta_m=4.82 # Range of mass tapering on the lower end of the mass distribution

beta=1.26 # Spectral index for the powerlaw of the mass ratio distribution

model = p.BBH_powerlaw_gaussian(

mminbh=mminbh,

mmaxbh=mmaxbh,

alpha=alpha,

mu_g=mu_g,

sigma_g=sigma_g,

lambda_peak=lambda_peak,

delta_m=delta_m,

beta=beta,

)

# sample mass1 and mass2

mass_1_source, mass_2_source = model.sample(Nsample=size)

return (mass_1_source, mass_2_source)

LeR initialization with custom functions and parameters

Initialize the class with the custom function

changing ler input params

[24]:

from ler.rates import LeR

import numpy as np

ler = LeR(npool=4, verbose=False,

# for source parameters

source_priors=dict(

merger_rate_density='merger_rate_density_bbh_popI_II_oguri2018',

source_frame_masses=powerlaw_peak,

),

source_priors_params=dict(

merger_rate_density=dict(

R0=38.8e-09,

b2=1.6,

b3=2.0,

b4=30

),

source_frame_masses=None,

),

# for lens parameters

lens_functions=dict(

strong_lensing_condition="rjs_with_cross_section_SIS",

optical_depth="optical_depth_SIS_haris",

),

lens_priors=dict(

velocity_dispersion="velocity_dispersion_gengamma",

),

lens_priors_params=dict(

velocity_dispersion=dict(a=2.32 / 2.67, c=2.67)

),

# for snr generation

waveform_approximant = 'IMRPhenomXPHM',

snr_type='inner_product',

spin_zero=False,

spin_precession=True,

)

since I am using inner product to calculate snr, it will take longer time to simulate the events.

You can increase the speed by allocating more CPU cores to the code. For example, if you have 8 logical cores, set npool>4

Sampling (Unlensed).

[25]:

ler.batch_size = 25000

# increase size for accurate rate calculation

# for Apple silicon M2 pro 16GB RAM, npool=4, time taken is 1m 44.8s

ler.unlensed_cbc_statistics(size=50000, resume=False, output_jsonfile = 'new_unlensed_params.json');

unlensed params will be store in ./ler_data/new_unlensed_params.json

chosen batch size = 25000 with total size = 50000

There will be 2 batche(s)

Batch no. 1

sampling gw source params...

calculating snrs...

solving SNR with inner product

100%|████████████████████████████████████████████████████████| 20250/20250 [00:51<00:00, 396.70it/s]

Batch no. 2

sampling gw source params...

calculating snrs...

solving SNR with inner product

100%|████████████████████████████████████████████████████████| 20344/20344 [00:51<00:00, 391.53it/s]

saving all unlensed parameters in ./ler_data/new_unlensed_params.json

Sampling (Lensed).

[26]:

# increase the size if you need accurate rate calculation

# for Apple silicon M2 pro 16GB RAM, npool=4, time taken is

ler.lensed_cbc_statistics(size=50000, resume=False, output_jsonfile = 'new_lensed_params.json');

lensed params will be store in ./ler_data/new_lensed_params.json

chosen batch size = 25000 with total size = 50000

There will be 2 batche(s)

Batch no. 1

sampling lensed params...

solving lens equations...

100%|███████████████████████████████████████████████████████| 25000/25000 [00:06<00:00, 3965.92it/s]

Invalid sample found. Resampling 1 lensed events...

solving lens equations...

100%|█████████████████████████████████████████████████████████████████| 1/1 [00:00<00:00, 11.49it/s]

calculating snrs...

solving SNR with inner product

100%|████████████████████████████████████████████████████████| 18026/18026 [00:46<00:00, 391.77it/s]

solving SNR with inner product

100%|████████████████████████████████████████████████████████| 18026/18026 [00:43<00:00, 410.05it/s]

solving SNR with inner product

100%|██████████████████████████████████████████████████████████| 2600/2600 [00:06<00:00, 410.74it/s]

solving SNR with inner product

100%|██████████████████████████████████████████████████████████| 2201/2201 [00:05<00:00, 405.64it/s]

Batch no. 2

sampling lensed params...

solving lens equations...

100%|███████████████████████████████████████████████████████| 25000/25000 [00:05<00:00, 4262.94it/s]

Invalid sample found. Resampling 2 lensed events...

solving lens equations...

100%|█████████████████████████████████████████████████████████████████| 2/2 [00:00<00:00, 24.85it/s]

calculating snrs...

solving SNR with inner product

100%|████████████████████████████████████████████████████████| 18070/18070 [00:43<00:00, 412.07it/s]

solving SNR with inner product

100%|████████████████████████████████████████████████████████| 18070/18070 [00:44<00:00, 409.45it/s]

solving SNR with inner product

100%|██████████████████████████████████████████████████████████| 2566/2566 [00:06<00:00, 414.08it/s]

solving SNR with inner product

100%|██████████████████████████████████████████████████████████| 2184/2184 [00:05<00:00, 413.02it/s]

saving all lensed parameters in ./ler_data/new_lensed_params.json

generate detectable events and compute the rate ratio

note: here no input params are provided, so it will track the json files generated above

For individual rate computation, use

ler.unlensed_rate();ler.lensed_rate();

Rate calculation and comparison.

[28]:

# you might not need to provide the json file names

# at the end of each sampling, the json file names will be updated in the ler_params.json file. The default json file names are call from the ler_params.json file.

rate_ratio, unlensed_param_detectable, lensed_param_detectable =ler.rate_comparison_with_rate_calculation(

unlensed_param='new_unlensed_params.json',

snr_threshold_unlensed=8.0,

lensed_param='new_lensed_params.json',

output_jsonfile_unlensed='new_unlensed_params_detectable.json',

output_jsonfile_lensed='new_lensed_params_detectable.json',

snr_threshold_lensed=[8.0,8.0],

num_img=[1,1],

)

Getting unlensed_param from json file ./ler_data/new_unlensed_params.json...

given detectability_condition == 'step_function'

total unlensed rate (yr^-1): 1522.6442174796687

number of simulated unlensed detectable events: 453

number of simulated all unlensed events: 50000

storing detectable params in ./ler_data/new_unlensed_params_detectable.json

Getting lensed_param from json file ./ler_data/new_lensed_params.json...

given detectability_condition == step_function

total lensed rate (yr^-1): 1.1059902347300807

number of simulated lensed detectable events: 337

number of simulated all lensed events: 50000

storing detectable params in ./ler_data/new_lensed_params_detectable.json

unlensed_rate: 1522.6442174796687

lensed_rate: 1.1059902347300807

ratio: 1376.7248296286027

Important Note: * input parameters, snr_threshold_lensed=[8.0,8.0], num_img=[1,1], means that two of the images should have snr>8.0. You can also set: snr_threshold_lensed=8, num_img=2

Similarly, if snr_threshold_lensed=[8.0,6.0], num_img=[2,2], it means that two of the images should have snr>8.0 and other two images should have snr>6.0. But in this case, even though the simulated lensed (detectable) events are correct, the rate calculation will not be right as the strong lensing condition was set for 2 image case.

Uning available model functions

How to look for available model functions?

All available names are stored as a dict in

lerinstancethe keys of this dict shows the parameter type

the values are also dict, where the keys are the model function names and the values are their input parameters

[29]:

# for unlensed case

print(ler.available_gw_prior_list_and_its_params['source_frame_masses'])

# for lensed case

print(ler.available_lens_prior_list_and_its_params['velocity_dispersion'])

{'binary_masses_BBH_popI_II_powerlaw_gaussian': {'mminbh': 4.98, 'mmaxbh': 112.5, 'alpha': 3.78, 'mu_g': 32.27, 'sigma_g': 3.88, 'lambda_peak': 0.03, 'delta_m': 4.8, 'beta': 0.81}, 'binary_masses_BBH_popIII_lognormal': {'Mc': 30.0, 'sigma': 0.3, 'beta': 1.1}, 'binary_masses_BBH_primordial_lognormal': {'Mc': 30.0, 'sigma': 0.3, 'beta': 1.1}, 'binary_masses_BNS_gwcosmo': {'mminns': 1.0, 'mmaxns': 3.0, 'alphans': 0.0}, 'binary_masses_BNS_bimodal': {'w': 0.643, 'muL': 1.352, 'sigmaL': 0.08, 'muR': 1.88, 'sigmaR': 0.3, 'mmin': 1.0, 'mmax': 2.3}}

{'velocity_dispersion_haris': {'a': 0.8689138576779026, 'c': 2.67}, 'velocity_dispersion_gengamma': {'a': 0.8689138576779026, 'c': 2.67}, 'velocity_dispersion_bernardi': None, 'velocity_dispersion_ewoud': None}

for looking at the choosen models and its input parameters

[30]:

# for unlensed case

print(ler.gw_param_samplers)

print(ler.gw_param_samplers_params)

# for lensed case

print(ler.lens_param_samplers)

print(ler.lens_param_samplers_params)

{'merger_rate_density': 'merger_rate_density_bbh_popI_II_oguri2018', 'source_frame_masses': <function powerlaw_peak at 0x32f3ffd90>, 'zs': 'sample_source_redshift', 'geocent_time': 'sampler_uniform', 'ra': 'sampler_uniform', 'dec': 'sampler_cosine', 'phase': 'sampler_uniform', 'psi': 'sampler_uniform', 'theta_jn': 'sampler_sine', 'a_1': 'sampler_uniform', 'a_2': 'sampler_uniform', 'tilt_1': 'sampler_sine', 'tilt_2': 'sampler_sine', 'phi_12': 'sampler_uniform', 'phi_jl': 'sampler_uniform'}

{'merger_rate_density': {'R0': 3.88e-08, 'b2': 1.6, 'b3': 2.0, 'b4': 30}, 'source_frame_masses': None, 'zs': None, 'geocent_time': {'min_': 1238166018, 'max_': 1269702018}, 'ra': {'min_': 0.0, 'max_': 6.283185307179586}, 'dec': None, 'phase': {'min_': 0.0, 'max_': 6.283185307179586}, 'psi': {'min_': 0.0, 'max_': 3.141592653589793}, 'theta_jn': None, 'a_1': {'min_': 0.0, 'max_': 0.8}, 'a_2': {'min_': 0.0, 'max_': 0.8}, 'tilt_1': None, 'tilt_2': None, 'phi_12': {'min_': 0, 'max_': 6.283185307179586}, 'phi_jl': {'min_': 0, 'max_': 6.283185307179586}}

{'source_redshift_sl': 'strongly_lensed_source_redshifts', 'lens_redshift': 'lens_redshift_SDSS_catalogue', 'velocity_dispersion': 'velocity_dispersion_gengamma', 'axis_ratio': 'axis_ratio_rayleigh', 'axis_rotation_angle': 'axis_rotation_angle_uniform', 'external_shear': 'shear_norm', 'density_profile_slope': 'density_profile_slope_normal', 'source_parameters': 'sample_gw_parameters'}

{'source_redshift_sl': None, 'lens_redshift': None, 'velocity_dispersion': {'a': 0.8689138576779026, 'c': 2.67}, 'axis_ratio': {'q_min': 0.2, 'q_max': 1.0}, 'axis_rotation_angle': {'phi_min': 0.0, 'phi_max': 6.283185307179586}, 'external_shear': {'scale': 0.05}, 'density_profile_slope': {'mean': 2.0, 'std': 0.2}, 'source_parameters': None}

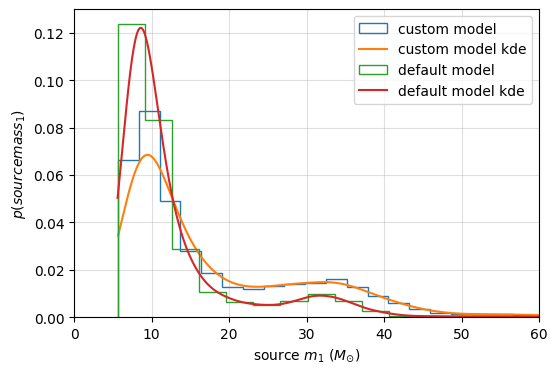

Comparison of mass distribution model (BBH, mass-1, larger mass only).

compare the default mass distribution with the custom mass distribution function defined on the previous example link.

[31]:

# calling the default mass distribution model

mass_1_source, mass_2_source = ler.binary_masses_BBH_popI_II_powerlaw_gaussian(size=10000)

default_model_dict = dict(mass_1_source=mass_1_source)

# calling the custom mass distribution model

mass_1_source, mass_2_source = powerlaw_peak(size=10000)

custom_model_dict = dict(mass_1_source=mass_1_source)

[32]:

import matplotlib.pyplot as plt

from ler.utils import plots as lerplt

# let's do a comparision plot between you custom model and the default model

plt.figure(figsize=(6, 4))

lerplt.param_plot(

param_name="mass_1_source",

param_dict=custom_model_dict, # or the json file name

plot_label='custom model',

);

lerplt.param_plot(

param_name="mass_1_source",

param_dict=default_model_dict,

plot_label='default model',

);

plt.xlabel(r'source $m_1$ ($M_{\odot}$)')

plt.ylabel(r'$p(source mass_1)$')

plt.xlim(0,60)

plt.grid(alpha=0.4)

plt.show()

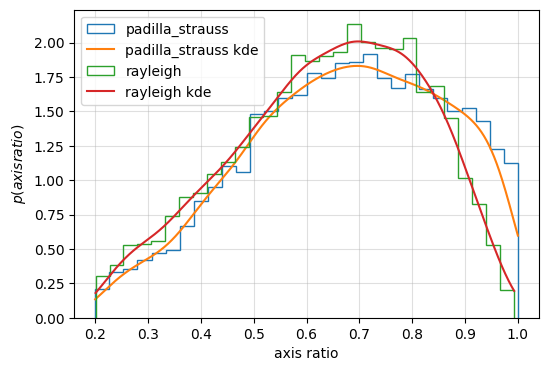

Comparison of Axis-ratio model of the lensing galaxy.

compare the default axis-ratio distribution (gengamma, from SDSS galaxy catalogue, Haris et al. 2018) with axis-ratio distribution from Padilla and Strauss 2008

[28]:

size = 10000

padilla_strauss = ler.axis_ratio_padilla_strauss(size=size)

# axis_ratio_rayleigh depends on the velocity dispersion

sigma = ler.velocity_dispersion_gengamma(size=size)

rayleigh = ler.axis_ratio_rayleigh(sigma=sigma)

# make a dict

axis_ratio_dict = dict(

padilla_strauss=padilla_strauss,

rayleigh=rayleigh,

)

axis_ratio_spline_coeff interpolator will be generated at ./interpolator_pickle/axis_ratio/axis_ratio_spline_coeff_0.pickle

axis_ratio interpolator will be generated at ./interpolator_pickle/axis_ratio/axis_ratio_1.pickle

[29]:

# plot the distribution of axis-ratio

plt.figure(figsize=(6, 4))

lerplt.param_plot(

param_name="padilla_strauss",

param_dict=axis_ratio_dict,

plot_label='padilla_strauss',

)

lerplt.param_plot(

param_name="rayleigh",

param_dict=axis_ratio_dict,

plot_label='rayleigh',

)

plt.xlabel(r'axis ratio')

plt.ylabel(r'$p(axis ratio)$')

plt.grid(alpha=0.4)

plt.show()

Generating particular number of detectable events.

this is particularly useful when you want only the detectable events to be saved in the json file

detectable event rates will be calculated at each batches. Subsequent batch will consider the previous batch’s detectable events. So, the rates will become more accurate as the batch number increases and will converge to a stable value at higher samples.

you can resume the rate calculation from the last saved batch.

[33]:

from ler.rates import LeR

# class initialization

ler = LeR(npool=8, verbose=False)

Unlensed case.

SNR>8

[34]:

n_size_unlensed_param = ler.selecting_n_unlensed_detectable_events(

size=5000,

snr_threshold=10.0,

batch_size=100000,

resume=False,

output_jsonfile='unlensed_params_n_detectable.json',

meta_data_file="meta_unlensed.json",

)

removing ./ler_data/unlensed_params_n_detectable.json and ./ler_data/meta_unlensed.json if they exist

collected number of detectable events = 0

given detectability_condition == 'step_function'

collected number of detectable events = 422

total number of events = 100000

total rate (yr^-1): 436.86662367311965

given detectability_condition == 'step_function'

collected number of detectable events = 841

total number of events = 200000

total rate (yr^-1): 435.31378022404454

given detectability_condition == 'step_function'

collected number of detectable events = 1255

total number of events = 300000

total rate (yr^-1): 433.0707841309361

given detectability_condition == 'step_function'

collected number of detectable events = 1705

total number of events = 400000

total rate (yr^-1): 441.2663467788323

given detectability_condition == 'step_function'

collected number of detectable events = 2094

total number of events = 500000

total rate (yr^-1): 433.5538909817594

given detectability_condition == 'step_function'

collected number of detectable events = 2503

total number of events = 600000

total rate (yr^-1): 431.8630170038777

given detectability_condition == 'step_function'

collected number of detectable events = 2916

total number of events = 700000

total rate (yr^-1): 431.24680928599076

given detectability_condition == 'step_function'

collected number of detectable events = 3325

total number of events = 800000

total rate (yr^-1): 430.2670390145505

given detectability_condition == 'step_function'

collected number of detectable events = 3740

total number of events = 900000

total rate (yr^-1): 430.1951481141304

given detectability_condition == 'step_function'

collected number of detectable events = 4138

total number of events = 1000000

total rate (yr^-1): 428.3777461515092

given detectability_condition == 'step_function'

collected number of detectable events = 4561

total number of events = 1100000

total rate (yr^-1): 429.2435740140238

given detectability_condition == 'step_function'

collected number of detectable events = 4979

total number of events = 1200000

total rate (yr^-1): 429.53375183026515

given detectability_condition == 'step_function'

collected number of detectable events = 5385

total number of events = 1300000

total rate (yr^-1): 428.8236909368846

stored detectable unlensed params in ./ler_data/unlensed_params_n_detectable.json

stored meta data in ./ler_data/meta_unlensed.json

trmming final result to size=5000

collected number of detectable events = 5000

total number of events = 1207057.0

total unlensed event rate (yr^-1): 428.8235626196801

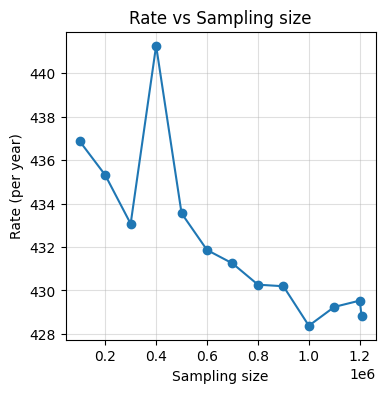

Important Note: At each iteration, rate is calculated using the cummulatively increasing number of events. It will become stable at around 2 million events. This is the number of events that is required to get a stable rate. Below, I am showing visualization of how rate changes with increasing number of events.

[35]:

from ler.utils import get_param_from_json

# getting data from json

meta_data= get_param_from_json("./ler_data/meta_unlensed.json")

# plot the rate vs sampling size

plt.figure(figsize=(4,4))

plt.plot(meta_data['events_total'], meta_data['total_rate'], 'o-')

plt.xlabel('Sampling size')

plt.ylabel('Rate (per year)')

plt.title('Rate vs Sampling size')

plt.grid(alpha=0.4)

plt.show()

Lensed case.

2 images, snr>8 (super-threshold)

1 image, snr>6 (sub+super-threshold)

[36]:

n_size_lensed_param = ler.selecting_n_lensed_detectable_events(

size=500,

snr_threshold=[8.0,6.0],

num_img=[2,1],

batch_size=50000,

resume=False,

output_jsonfile='lensed_params_n_detectable.json',

meta_data_file="meta_lensed.json",

)

removing ./ler_data/lensed_params_n_detectable.json and ./ler_data/meta_lensed.json if they exist

collected number of detectable events = 0

100%|███████████████████████████████████████████████████████| 50000/50000 [00:11<00:00, 4299.84it/s]

100%|█████████████████████████████████████████████████████████████████| 1/1 [00:00<00:00, 12.57it/s]

given detectability_condition == step_function

collected number of detectable events = 112

total number of events = 50000

total rate (yr^-1): 0.508493641457471

100%|███████████████████████████████████████████████████████| 50000/50000 [00:11<00:00, 4296.83it/s]

100%|█████████████████████████████████████████████████████████████████| 1/1 [00:00<00:00, 11.66it/s]

given detectability_condition == step_function

collected number of detectable events = 212

total number of events = 100000

total rate (yr^-1): 0.48125291066510645

100%|███████████████████████████████████████████████████████| 50000/50000 [00:12<00:00, 4094.12it/s]

100%|█████████████████████████████████████████████████████████████████| 3/3 [00:00<00:00, 38.02it/s]

given detectability_condition == step_function

collected number of detectable events = 317

total number of events = 150000

total rate (yr^-1): 0.4797395367321973

100%|███████████████████████████████████████████████████████| 50000/50000 [00:11<00:00, 4289.40it/s]

given detectability_condition == step_function

collected number of detectable events = 433

total number of events = 200000

total rate (yr^-1): 0.4914681847122432

100%|███████████████████████████████████████████████████████| 50000/50000 [00:11<00:00, 4318.64it/s]

100%|█████████████████████████████████████████████████████████████████| 1/1 [00:00<00:00, 12.09it/s]

given detectability_condition == step_function

collected number of detectable events = 531

total number of events = 250000

total rate (yr^-1): 0.48216093502485197

storing detectable lensed params in ./ler_data/lensed_params_n_detectable.json

storing meta data in ./ler_data/meta_lensed.json

trmming final result to size=500

collected number of detectable events = 500

total number of events = 235405.0

total lensed event rate (yr^-1): 0.4821607228741339

[37]:

print(n_size_lensed_param.keys())

print(f"size of each parameters={len(n_size_lensed_param['zl'])}")

dict_keys(['zl', 'zs', 'sigma', 'q', 'theta_E', 'phi', 'e1', 'e2', 'gamma1', 'gamma2', 'gamma', 'geocent_time', 'ra', 'dec', 'phase', 'psi', 'theta_jn', 'luminosity_distance', 'mass_1_source', 'mass_2_source', 'mass_1', 'mass_2', 'x0_image_positions', 'x1_image_positions', 'magnifications', 'time_delays', 'image_type', 'n_images', 'effective_luminosity_distance', 'effective_geocent_time', 'snr_net', 'L1', 'H1', 'V1'])

size of each parameters=500

Using custom detection criteria.

I leverage the ANN (Artificial Neural Network) based SNR calculator from gwsnr. It can predict SNR>8 with 99.9% accuracy for the astrophysical parameters. But to make it 100% accurate, I will recalculate SNR some of the events using inner product method. LeR can do this automatically.

I will test two cases using:

pdet (probability of detection) with ANN

SNR with ANN.

Note: Check out GRB rate calculation example for more details on how to use custom detection criteria.

[38]:

from ler.rates import LeR

from gwsnr import GWSNR

import numpy as np

Defining custom detection criteria (using gwsnr package).

pdet only calculation

[39]:

snr_ = GWSNR(gwsnr_verbose=True, pdet=True, snr_type='ann', waveform_approximant='IMRPhenomXPHM')

psds not given. Choosing bilby's default psds

Intel processor has trouble allocating memory when the data is huge. So, by default for IMRPhenomXPHM, duration_max = 64.0. Otherwise, set to some max value like duration_max = 600.0 (10 mins)

Interpolator will be loaded for L1 detector from ./interpolator_pickle/L1/partialSNR_dict_1.pickle

Interpolator will be loaded for H1 detector from ./interpolator_pickle/H1/partialSNR_dict_1.pickle

Interpolator will be loaded for V1 detector from ./interpolator_pickle/V1/partialSNR_dict_1.pickle

Chosen GWSNR initialization parameters:

npool: 4

snr type: ann

waveform approximant: IMRPhenomXPHM

sampling frequency: 2048.0

minimum frequency (fmin): 20.0

mtot=mass1+mass2

min(mtot): 2.0

max(mtot) (with the given fmin=20.0): 184.98599853446768

detectors: ['L1', 'H1', 'V1']

psds: [PowerSpectralDensity(psd_file='None', asd_file='/Users/phurailatpamhemantakumar/anaconda3/envs/ler/lib/python3.10/site-packages/bilby/gw/detector/noise_curves/aLIGO_O4_high_asd.txt'), PowerSpectralDensity(psd_file='None', asd_file='/Users/phurailatpamhemantakumar/anaconda3/envs/ler/lib/python3.10/site-packages/bilby/gw/detector/noise_curves/aLIGO_O4_high_asd.txt'), PowerSpectralDensity(psd_file='None', asd_file='/Users/phurailatpamhemantakumar/anaconda3/envs/ler/lib/python3.10/site-packages/bilby/gw/detector/noise_curves/AdV_asd.txt')]

Testing the custom detection criteria.

Pdet calculator test

[40]:

# initialization pdet calculator

pdet_calculator = snr_.snr

mass_1 = np.array([5, 10.,50.,200.])

ratio = np.array([1, 0.8,0.5,0.2])

luminosity_distance = np.array([1000, 2000, 3000, 4000])

# test

pdet = pdet_calculator(

gw_param_dict=dict(

mass_1=mass_1,

mass_2=mass_1*ratio,

luminosity_distance=luminosity_distance,

)

)

inner_product_snr = snr_.compute_bilby_snr(

mass_1=mass_1,

mass_2=mass_1*ratio,

luminosity_distance=luminosity_distance,

)

print(f"pdet: {pdet}")

print(f"inner_product_snr: {inner_product_snr}")

100%|█████████████████████████████████████████████████████████████████| 3/3 [00:00<00:00, 16.74it/s]

pdet: {'L1': array([0, 0, 1, 0]), 'H1': array([0, 0, 0, 0]), 'V1': array([0, 0, 0, 0]), 'pdet_net': array([1, 0, 1, 0])}

inner_product_snr: {'L1': array([ 7.4441411 , 5.85368864, 10.6450267 , 0. ]), 'H1': array([4.73471203, 3.72313335, 6.77057773, 0. ]), 'V1': array([2.23257635, 1.73563894, 3.21070705, 0. ]), 'snr_net': array([ 9.10039185, 7.15121214, 13.01790904, 0. ])}

Using custom detection criteria with LeR.

Below is an example of general case of initialising with any type of pdet calculator.

Refer to the documentation example for extra details, where I have used pdet for GRB (gamma-ray-burst) detection.

[41]:

from ler.rates import LeR

ler = LeR(verbose=False, pdet_finder=pdet_calculator, spin_zero=False,

spin_precession=True)

[42]:

unlensed_param = ler.unlensed_cbc_statistics();

unlensed params will be store in ./ler_data/unlensed_param.json

chosen batch size = 50000 with total size = 100000

There will be 2 batche(s)

Batch no. 1

sampling gw source params...

calculating pdet...

Batch no. 2

sampling gw source params...

calculating pdet...

saving all unlensed parameters in ./ler_data/unlensed_param.json

now calculate rate using the custom ‘pdet’ calculator

[43]:

_, unlensed_param_detectable = ler.unlensed_rate(detectability_condition='pdet')

Getting unlensed_param from json file ./ler_data/unlensed_param.json...

given detectability_condition == 'pdet'

total unlensed rate (yr^-1): 502.08604853427255

number of simulated unlensed detectable events: 485

number of simulated all unlensed events: 100000

storing detectable params in ./ler_data/unlensed_param_detectable.json

[44]:

# # for lensed case

# lensed_param = ler.lensed_cbc_statistics()

# _, lensed_param_detectable = ler.lensed_rate(detectability_condition='pdet')

Analysis: SNR (with ANN) + SNR recalculation (inner product).

[45]:

from ler.rates import LeR

# ler initialization with gwsnr arguments

ler = LeR(npool=6,

verbose=False,

snr_type='ann',

waveform_approximant='IMRPhenomXPHM',

spin_zero=False,

spin_precession=True

)

[46]:

unlensed_param = ler.selecting_n_unlensed_detectable_events(

size=500,

snr_threshold=10.0,

batch_size=50000,

resume=False,

trim_to_size=False,

output_jsonfile='unlensed_params_n_detectable_ann.json',

meta_data_file="meta_unlensed_ann.json",

snr_recalculation=True,

snr_threshold_recalculation=[4,20], # it will recalculate SNR for events with (SNR_ANN > 4) and (SNR_ANN < 20)

)

removing ./ler_data/unlensed_params_n_detectable_ann.json and ./ler_data/meta_unlensed_ann.json if they exist

collected number of detectable events = 0

100%|██████████████████████████████████████████████████████████| 1598/1598 [00:04<00:00, 351.02it/s]

given detectability_condition == 'step_function'

collected number of detectable events = 204

total number of events = 50000

total rate (yr^-1): 422.373418148419

100%|██████████████████████████████████████████████████████████| 1557/1557 [00:04<00:00, 344.99it/s]

given detectability_condition == 'step_function'

collected number of detectable events = 425

total number of events = 100000

total rate (yr^-1): 439.97231057126976

100%|██████████████████████████████████████████████████████████| 1503/1503 [00:04<00:00, 345.51it/s]

given detectability_condition == 'step_function'

collected number of detectable events = 604

total number of events = 150000

total rate (yr^-1): 416.85219699615203

stored detectable unlensed params in ./ler_data/unlensed_params_n_detectable_ann.json

stored meta data in ./ler_data/meta_unlensed_ann.json

[47]:

# # Uncomment the below code if you need to compare with the rate calculation using inner product snr

from ler.rates import LeR

# # ler initialization with gwsnr arguments

# ler = LeR(npool=6, verbose=False, snr_type='inner_product', waveform_approximant='IMRPhenomXPHM', spin_zero=False,

# spin_precession=True)

# unlensed_param = ler.selecting_n_unlensed_detectable_events(

# size=1000,

# snr_threshold=10.0,

# batch_size=50000,

# resume=False,

# output_jsonfile='unlensed_params_n_detectable.json',

# meta_data_file="meta_unlensed.json",

# )

[48]:

lensed_param = ler.selecting_n_lensed_detectable_events(

size=500,

snr_threshold=[8.0,8.0],

num_img=[1,1],

batch_size=50000,

resume=False,

output_jsonfile='lensed_params_n_detectable_ann.json',

meta_data_file="meta_lensed_ann.json",

snr_recalculation=True,

snr_threshold_recalculation=[[4,4], [20,20]], # it will recalculate SNR for events with (SNR_ANN > 4) and (SNR_ANN < 20)

)

removing ./ler_data/lensed_params_n_detectable_ann.json and ./ler_data/meta_lensed_ann.json if they exist

collected number of detectable events = 0

100%|███████████████████████████████████████████████████████| 50000/50000 [00:11<00:00, 4310.16it/s]

calculating snrs...

100%|██████████████████████████████████████████████████████████| 1538/1538 [00:04<00:00, 382.74it/s]

100%|██████████████████████████████████████████████████████████| 1538/1538 [00:03<00:00, 389.81it/s]

100%|██████████████████████████████████████████████████████████| 1084/1084 [00:02<00:00, 363.55it/s]

100%|████████████████████████████████████████████████████████████| 964/964 [00:02<00:00, 377.48it/s]

given detectability_condition == step_function

collected number of detectable events = 220

total number of events = 50000

total rate (yr^-1): 0.9988267957200324

100%|███████████████████████████████████████████████████████| 50000/50000 [00:11<00:00, 4299.16it/s]

calculating snrs...

100%|██████████████████████████████████████████████████████████| 1436/1436 [00:03<00:00, 387.69it/s]

100%|██████████████████████████████████████████████████████████| 1436/1436 [00:03<00:00, 389.49it/s]

100%|██████████████████████████████████████████████████████████| 1011/1011 [00:02<00:00, 375.16it/s]

100%|████████████████████████████████████████████████████████████| 884/884 [00:02<00:00, 375.81it/s]

given detectability_condition == step_function

collected number of detectable events = 430

total number of events = 100000

total rate (yr^-1): 0.9761261867263953

100%|███████████████████████████████████████████████████████| 50000/50000 [00:11<00:00, 4311.48it/s]

100%|█████████████████████████████████████████████████████████████████| 1/1 [00:00<00:00, 11.26it/s]

calculating snrs...

100%|██████████████████████████████████████████████████████████| 1525/1525 [00:03<00:00, 381.67it/s]

100%|██████████████████████████████████████████████████████████| 1525/1525 [00:03<00:00, 390.52it/s]

100%|██████████████████████████████████████████████████████████| 1066/1066 [00:02<00:00, 375.39it/s]

100%|████████████████████████████████████████████████████████████| 919/919 [00:02<00:00, 379.79it/s]

given detectability_condition == step_function

collected number of detectable events = 662

total number of events = 150000

total rate (yr^-1): 1.0018535435858507

storing detectable lensed params in ./ler_data/lensed_params_n_detectable_ann.json

storing meta data in ./ler_data/meta_lensed_ann.json

trmming final result to size=500

collected number of detectable events = 500

total number of events = 113293.0

total lensed event rate (yr^-1): 1.001853997759663

[49]:

ler.rate_ratio();

unlensed_rate: 416.85219699615203

lensed_rate: 1.001853997759663

ratio: 416.0807841544908

[ ]: